As our applications become universally available on more and more platforms, consistent and reliable API communication becomes increasingly vital in order to move quickly. GraphQL is an outstanding way to provide expressive access to your data schema with a built in language for consistent contracts between your API and its clients.

GraphQL gives your front end developers rich tools for understanding the schema and selecting just the data they need. Using it in a sound, type-safe way can be challenging, however - especially in a strict TypeScript environment. My team dealt with maintaining large sets of verbose and error-prone type interfaces and gql expressions to ensure that we could keep our types as solid as possible.

GraphQL also provides a great platform for highly optimized performance, giving you everything you need to plan high-performance joins across related tables and implement efficient batching logic for your data. This is not handed out for free, however, and you often have to do a great deal of work to take advantage of it. What's more, GraphQL's approach to related data types can easily run into the same kind of N+1 query problems that ORM's regularly deal with. If you ignore this, you can end up in a performance nightmare with no way out but to refactor.

This series of articles will cover how to build a high-performance, type-safe, easy-to-consume API using Node, TypeScript, and PostgreSQL. Today's Part 1 will cover the goals of the architecture and the first two tools that we'll use - Knex and PostGraphile. Part 2 will walk through how to extend the automatic schema and build business logic, and it will introduce GraphQL Code Generator to support consistent and accurate type-safe access to your data. Part 3 will introduce a React front end and show how to generate automatic types and components to use with Apollo Client.

Code-Generated Bliss

I've been on a quest to make it easier to use GraphQL in a reliable and high-performance way using Node and TypeScript. I tried a number of different approaches, and I settled on introspection and automatic code generation as the best strategy for using GraphQL effectively with as little effort as possible.

To start off, I want to automatically derive a default set of resolvers that automatically plan efficient joins and batch queries for me so I can avoid the N+1 problem. I've managed SQL joins manually for deeply nested sets of related tables in the past, and the level of effort isn't worth it when there are tools out there that can help you plan queries automatically.

This isn't always the case, however, and there are sometimes situations where overriding the default and manually optimizing a query is best. I love solutions that are automatic by default yet give you the freedom to override that and attempt to do better by hand.

Beyond the mechanics of managing data, I also want to automatically derive the TypeScript types to describe that data and the kinds of operations I can perform on it. With the expressive and dynamic nature of GraphQL's responses, which can vary widely depending on how your API client calls a particular Query or Mutation, it can be tedious and fragile to write out all of the TypeScript types for all the different operations your application uses by hand.

Thankfully, there's a way to do each of these things consistently and reliably, without a Herculean effort!

Automatic PostgreSQL

My database of choice for over a decade now has been PostgreSQL. It's a one-stop-shop for both traditional relational and alternative schema-free data with powerful security features, rich clustering and replication support, and outstanding performance.

There are a few great tools out there for automatically generating a GraphQL data access layer for a Postgres database, like Prisma or Hasura. My favorite tool is one that provides a high-performance interface to my data in a way that integrates smoothly with my core Node architecture - PostGraphile. It's based on a "database-first" perspective that uses your Postgres schema as the single source of truth for your core GraphQL types, operations, and resolvers. It's easy to extend without resorting to things like schema stitching or federation, and I've found it to be the best way to build an API that is easy to maintain while avoiding pitfalls like the N+1 problem.

Generating things automatically doesn't mean you don't have to know your database, however. On the contrary - the point of PostGraphile is to allow you to stay closer to your database, implementing as much as possible with Postgres and then deriving as much as possible from it. If you decide to move away from PostGraphile, you still have a carefully crafted database schema that you can use with many other developer ecosystems, in Node and beyond.

To make the process of working within this structure easier, I've published a set of tools under the npm package @graft/server.

Setting up your Schema

Since PostGraphile is database-first, the first thing we need to do is set up our database and give it some structure. To do this, I like to pull out an old standard of mine for data migrations in Node - Knex. I'm using it with TypeScript, so I'll install both knex and ts-node to my project. Then, I can set up a knexfile.js that looks like this:

require('ts-node/register/transpile-only')

const readFile = require('fs').readFileSync

const dotenv = require('dotenv')

const parseDbUrl = require('parse-database-url')

dotenv.config()

const dbUrl =

process.env.DATABASE_URL ||

'postgres://app_root:password@localhost:5432/app'

const dbConfig = parseDbUrl(dbUrl)

const ssl = Boolean(process.env.DATABASE_SSL) || Boolean(dbConfig.ssl)

const sslca = process.env.DATABASE_SSL_CA || dbConfig.sslca

module.exports = {

client: 'pg',

connection: {

host: process.env.DATABASE_HOSTNAME || dbConfig.host,

database: process.env.DATABASE_NAME || dbConfig.database,

user: process.env.DATABASE_USERNAME || dbConfig.user,

password: process.env.DATABASE_PASSWORD || dbConfig.password,

port: process.env.DATABASE_PORT || dbConfig.port,

ssl: Boolean(ssl || sslca)

? sslca

? {ca: readFile(sslca)}

: true

: undefined,

},

pool: {

min: process.env.DATABASE_POOL_MIN,

max: process.env.DATABASE_POOL_MAX,

idle: process.env.DATABASE_POOL_IDLE,

},

migrations: {

tableName: 'knex_migrations',

},

}This file does a few things. First, it reads any local .env files it finds using the dotenv libray. Then, it parses the DATABASE_URL with parse-database-url so that we can use concise, one-line connection descriptors. Then, it uses the parsed config to populate the Knex config values. Each value can be individually set or overridden by environment variable, as well.

Now we can write some migrations. Create a migrations folder in your project, and start out with an initial setup migration:

/* tslint:disable await-promise */

import Knex from 'knex'

import {KnexUtils} from '@graft/server'

export async function up(knex: Knex) {

await knex.raw('CREATE EXTENSION IF NOT EXISTS "uuid-ossp";')

// timestamp updates

await knex.raw(`

CREATE OR REPLACE FUNCTION set_updated_at()

RETURNS TRIGGER AS $$

BEGIN

NEW.updated_at = CURRENT_TIMESTAMP;

RETURN NEW;

END;

$$ language 'plpgsql';

`)

// ignore the migration tables

await knex.raw(`COMMENT ON TABLE knex_migrations is E'@omit';`)

await knex.raw(`COMMENT ON TABLE knex_migrations_lock is E'@omit';`)

// access roles

await knex.raw(

`ALTER DEFAULT PRIVILEGES REVOKE EXECUTE ON FUNCTIONS FROM public;`

)

await knex.raw('DROP ROLE IF EXISTS app_user;')

await knex.raw('CREATE ROLE app_user;')

await knex.raw(`GRANT USAGE ON SCHEMA public TO app_user;`)

await knex.raw(`GRANT app_user TO app_root;`)

}

export function down(_knex: Knex) {

throw new Error('Downward migrations are not supported. Restore from backup.')

}This migration does some role setup for PostGraphile, differentiating between an app_root role that the process runs under, and the app_user role that our HTTP requests will run under. It also creates a set_updated_at for easy updating of the updated_at field that Knex can automatically create.

PostGraphile can handle JWT authentication for you, but I like to take full control over the process and use koa-jwt. To do this, I create a user table with a username column that should match my auth provider's sub value. I use a couple of tools that I bundle with with my @graft/server library so that I can take advantage of UUID-based ids, and easily use the set_updated_at function that I defined previously.

Extend the up function in your migration with this:

// user

await knex.schema.createTable('user', table => {

table.comment('User authentication information')

KnexUtils.primaryUuid(knex, table)

table.timestamps(true, true)

// fields

table

.string('username')

.unique()

.notNullable()

.comment(`The User''s login id - usually their email address.`)

table

.boolean('is_active')

.comment(`If false, the User is suspended.`)

.defaultTo(true)

})

await KnexUtils.updateTimestamp(knex, 'user')

await knex.raw(

`GRANT SELECT, INSERT, UPDATE, DELETE ON TABLE "user" TO app_user;`

)

await knex.raw(`ALTER TABLE "user" ENABLE ROW LEVEL SECURITY;`)

await knex.raw(`

CREATE POLICY user_same_user_policy ON "user"

USING (username = current_setting('jwt.claims.sub'))

WITH CHECK (username = current_setting('jwt.claims.sub'));

`)Now we're starting to take advantage of Knex's query-building tools, following them up with some raw SQL to secure the new table. The CREATE POLICY call sets two distinct checks - one for reads and one for writes. In both cases, we're checking to see if the jwt.claims.sub setting in Postgres matches the value of the username column in order to determine if the user can access or manipulate a given row. This automatically limits a user to only their own user row.

Now, we can go ahead and run our migration! Add a few entries to your package.json "scripts" definition:

"db.migrate": "knex migrate:latest",

"db.reset": "dropdb --if-exists app && createdb app -O app_root && yarn db.migrate",

"db.shell": "PGPASSWORD=password psql -h localhost -U app_root app",Then, run a few commands to set up the database and user locally. If you don't have a local superuser, set that up first for convenience. Don't do this on a server - just on your secure local development machine.

sudo --user postgres createuser --superuser $USER

createdb $USER -O $USERThen set up a user for your app (replacing "app" with whatever name you want). Again, don't set it up this way on a server.

createuser --superuser app_root

createdb communityfunded --owner app_root

psql -c "ALTER USER app_root WITH PASSWORD 'password';"Now, you can run your db.migrate script (swapping "npm run" for "yarn" if that's your thing):

yarn db.migrateWith that, you should now have a user table in your database.

Setting up your App

Next, we need to set up the server process that handles low-level HTTP requests for your app. Koa is my favorite choice for this because it supports "nesting doll" middleware, giving you the opportunity to act on both the Request and the Response phase using a convenient async/await syntax. Other popular choices here include Express or Hapi.

To start setting up Koa, import a few dependencies:

import http from 'http'

import Koa from 'koa'

import Router from 'koa-router'

import morgan from 'koa-morgan'

import bodyParser from 'koa-bodyparser'

import chalk from 'chalk'

import jwt from 'koa-jwt'

import jwks from 'jwks-rsa'Then, set up an app, a router, and an error handler:

const app = new Koa()

const router = new Router()

app.on('error', (err: Error, _ctx: Koa.Context) => {

console.error(err)

})Next, configure your JWT settings if you're using koa-jwt like I am:

const jwtCheck = jwt({

// @ts-ignore jwks-rsa types are inaccurate - it returns a SecretLoader

secret: jwks.koaJwtSecret({

cache: true,

rateLimit: true,

jwksRequestsPerMinute: 5,

jwksUri: Auth.jwksUri,

}),

audience: Auth.audience,

issuer: Auth.issuer,

algorithms: ['RS256'],

passthrough: true,

})My Auth value there comes from a Config module which exports the following namespace:

export namespace Auth {

export const jwksUri =

process.env.AUTH0_JWKS_URI ||

'https://app.auth0.com/.well-known/jwks.json'

export const audience =

process.env.AUTH0_AUDIENCE || 'https://app.deterministic.dev'

export const issuer = process.env.AUTH0_ISSUER || 'https://app.auth0.com/'

}Next, I want to set up my PostGraphile options, and a config for the Playground middleware I want to include along with it. My team has been using Apollo Server up to this point, so I continue to make that familiar Pyalground interface available in the same way. I import some more things:

import playground from 'graphql-playground-middleware-koa'

import {PostGraphileOptions, postgraphile} from 'postgraphile'

import {MiddlewareOptions as PlaygroundOptions} from 'graphql-playground-html'Then I set up options to use them:

const options: PostGraphileOptions = {

appendPlugins: Plugins.plugins,

additionalGraphQLContextFromRequest: async (req: IncomingMessage) => ({

user: req._koaCtx && req._koaCtx.state.user,

}),

// @ts-ignore - conflicting error types

handleErrors: Plugins.handleErrors,

pgSettings: Plugins.pgSettings,

}

const playgroundOpts: PlaygroundOptions = {

endpoint: '/graphql',

settings: {

// @ts-ignore - incomplete type

'schema.polling.enable': false,

},

}The IncomingMessage type is one that I've extended for my own use, like this:

import * as Http from 'http'

import * as Koa from 'koa'

export interface IncomingMessage extends Http.IncomingMessage {

_koaCtx?: Koa.Context

}PostGraphile's Koa adapter is what actually attaches the _koaCtx property to the Request object, allowing us to get back to the Koa context from our additionalGraphQLContextFromRequest callback.

The handleErrors callback is pretty simple:

import Debug from 'debug'

import {GraphQLError, formatError} from 'graphql'

import {GraphQLErrorExtended} from 'postgraphile'

export const handleErrors = (errors: readonly GraphQLError[]) =>

errors.map((error: GraphQLError) => {

const formattedError = formatError(error) as GraphQLErrorExtended

// If this is dev, add the stack to the formatted error.

if (Environment.isDev) {

debug(error)

formattedError.extensions.stack = error.stack

}

return formattedError

})The pgSettings callback is similarly simple, but it's important because it's how you get things like a user sub into Postgres and set the app_user role for each request:

export async function pgSettings(req: IncomingMessage) {

const ctx = req._koaCtx

const user = ctx && ctx.state.user

const sub = user && user.sub

return {

'jwt.claims.sub': sub,

role: 'app_user',

}

}Finally, I define error handling middleware for Koa:

export const catchErrors: Koa.Middleware = async (ctx, next) => {

try {

await next()

} catch (err) {

ctx.status = err.status || 500

ctx.body =

err.publicMessage ||

'{"error": "An error has occurred. Please try your request again later."}'

ctx.app.emit('error', err, ctx)

}

}Okay, now we can put it all together into a server pipeline! Here's how I set it up with Koa:

app.use(morgan(Environment.isDev ? 'dev' : 'combined'))

app.use(catchErrors)

app.use(jwtCheck)

app.use(bodyParser())

router.get('/', async (ctx, _next) => {

ctx.body = 'ok'

})

if (Environment.isDev) {

router.get('/graphql', playground(playgroundOpts))

}

router.post('/graphql', postgraphile(Database.url, 'public', options))

app.use(router.routes()).use(router.allowedMethods())The / handler responds with a simple "ok" response for health checks.

The Environment module here is another Config namespace that I expose, based on environment variables. I don't expose the Playground interface if this is not a dev environment.

Finally, I expose the PostGraphile interface on the /graphql endpoint, just for POST requests. If I need CORS, I typically use koa-cors further up the middleware chain to respond to OPTIONS requests.

To run everything, I add this at the end:

const server = http.createServer(app.callback())

server.listen(Server.port, () => {

console.log(

chalk.cyan(

`> Started API on port ${chalk.yellow(Server.port.toString())}`

)

)

})

server.on('close', () => {

console.log(chalk.cyan(`> API shutting down`))

})Again, Server is another Config namespace with application settings - this time for the port to listen on.

Exploring the Fruits of your Labor

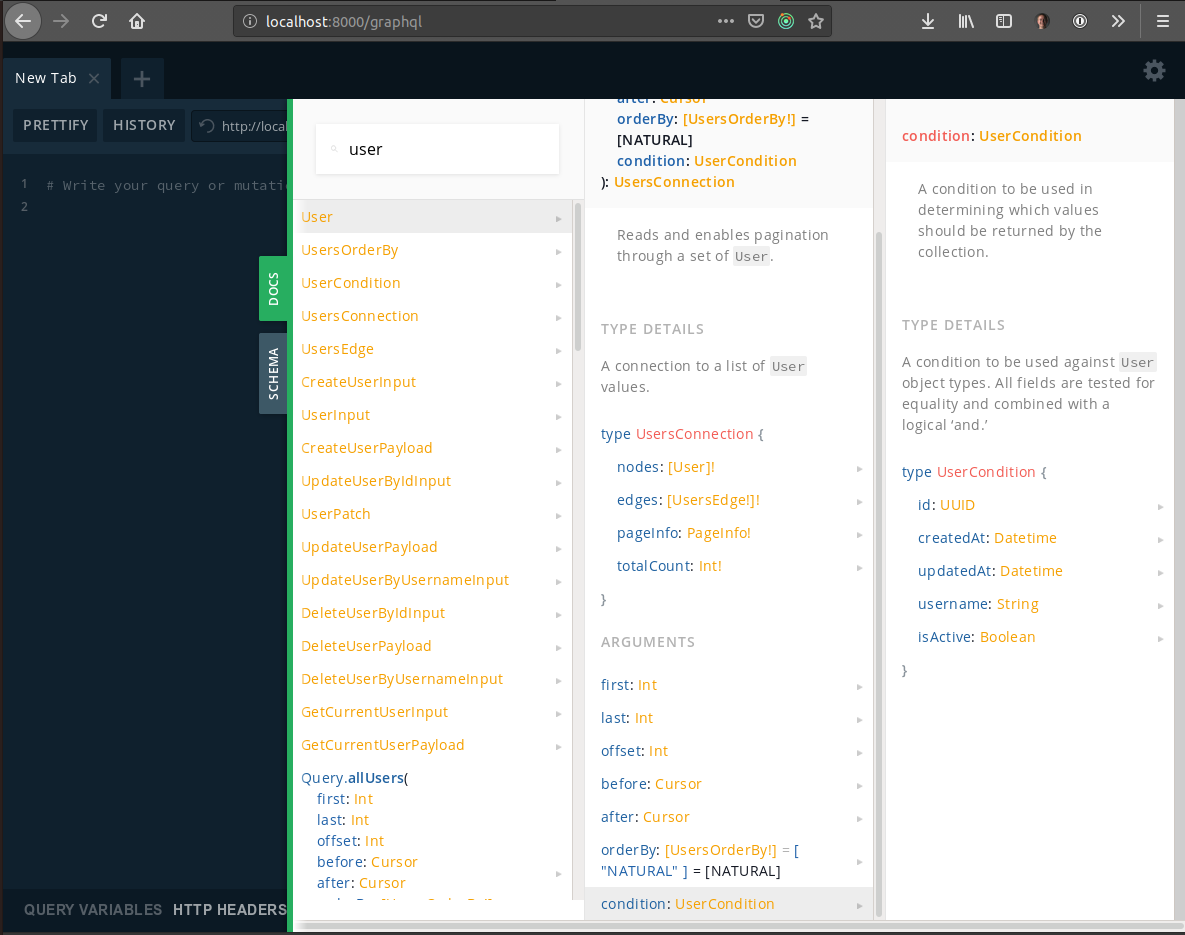

There we have it! If you run yarn dev, you should now be able to visit the local Playground instance at http://localhost:8000/graphql!

With this interface, you can explore the schema and even execute Queries and Mutations, but keep in mind you'll need a valid JWT token set in the "Http Headers" section of the app to run anything with active row-level security.

We're just getting started!

Now that we have a core set of GraphQL operations to work with, it's time to get down to some real business! Next time, I'll cover how to extend the automatic schema and build business logic within PostGraphile plugins, and introduce GraphQL Code Generator to provide consistent and accurate type-safe access to your data.

My strategy is to use the auto-generated schema from within PostGraphile plugins as if I were an external client - using the expressive GraphQL query language to interact with my database using the tools that PostGraphile provides.

In the meantime, you can find me on Twitter @bkonkle. Let me know what you think so far!